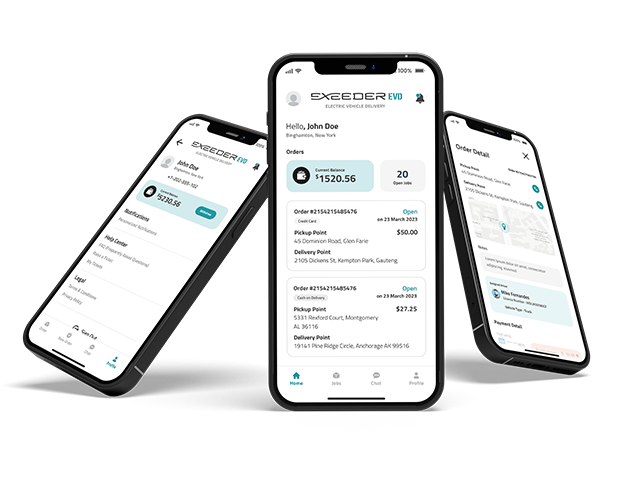

Crafting Smarter, Faster Digital Journeys

Empowering businesses of all sizes, we’ve successfully delivered over 500 advanced software solutions since 2015. From HIPAA-compliant practice management tools to AI-enabled HRMS, we help you achieve your goals faster.

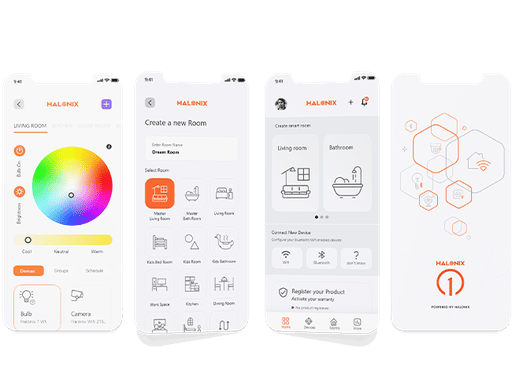

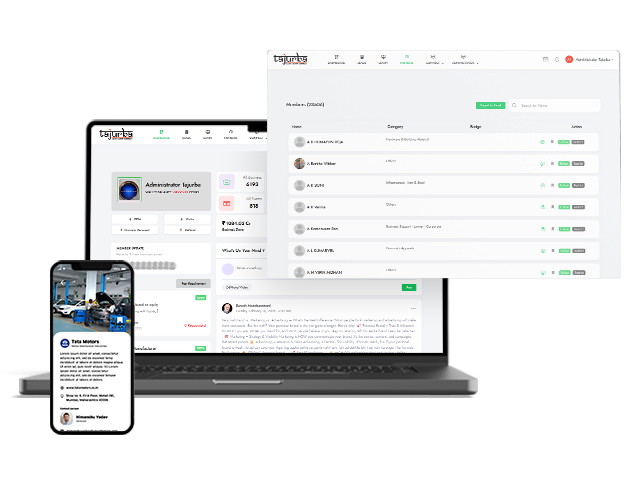

Transforming Lives Through Intelligent Applications

Trusted by 100+ global businesses, we design digital products that improve lives and create a competitive edge. Whether it’s modernizing legacy systems or building IoT-powered solutions, we deliver excellence every time.

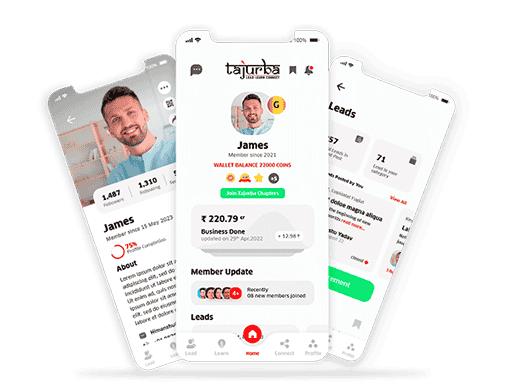

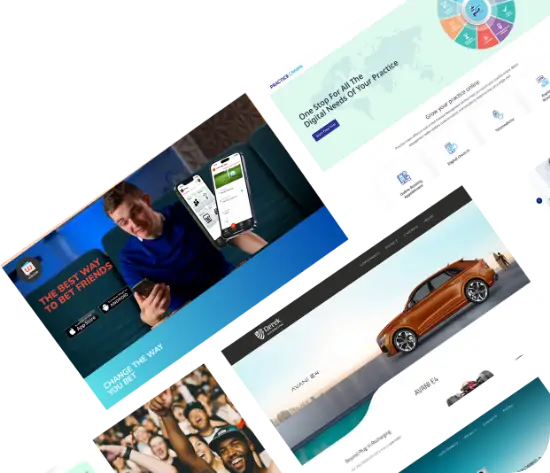

Driving Growth With Tailored Technology

From ideation to deployment, we transform your vision into impactful products that fuel growth. Be it business networking platforms or online games, our solutions drive real results for startups and enterprises alike.

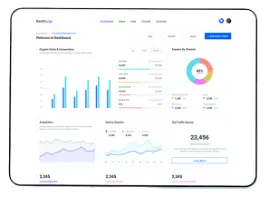

Building Smarter, Faster Digital Platforms

With expertise in SaaS development, we’ve earned the trust of 100+ global brands. Our custom software solutions and exclusive remote team model enable smarter decision-making, effective sprints, and scalable innovations for operational excellence.

The goal of BizBrolly as an enterprise is to have customer service that is not just the best, but legendary.
